Master Medallion Architecture in Databricks: 5 Proven Steps to Boost Your Data Pipeline (Pros & Cons)

April 8, 2025 | by adarshnigam75@gmail.com

Introduction

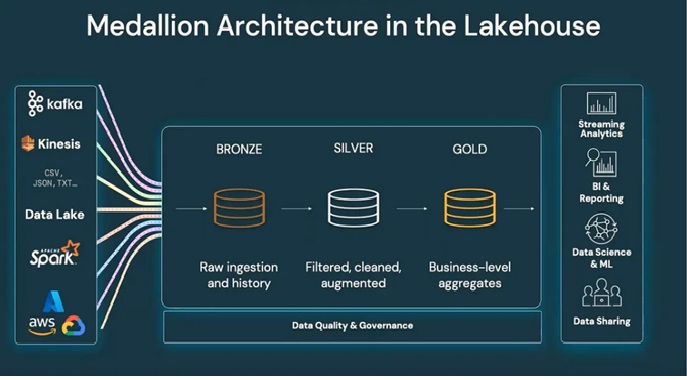

Medallion Architecture in Databricks is a powerful framework for organizing data lakes into Bronze (raw), Silver (cleaned), and Gold (enriched) layers. This approach ensures scalability, reliability, and governance—critical for modern data pipelines. Databricks, with its Delta Lake integration, is a leading platform for implementing this architecture efficiently.

In this guide, we’ll explore:

✅ 5 key steps to implement Medallion Architecture in Databricks.

✅ Pros & cons of this approach.

✅ Cross-technology comparisons (vs. traditional warehousing).

✅ Best practices for optimization.

Let’s dive in!

1. What is Medallion Architecture?

Medallion Architecture is a data design pattern that structures data into three layers:

| Layer | Purpose | Example Use Case |

|---|---|---|

| Bronze | Raw, unprocessed data | Ingestion from Kafka, CDC logs |

| Silver | Cleaned, validated data | Deduplication, schema enforcement |

| Gold | Business-ready aggregates | Reports, ML feature stores |

Why use it in Databricks?

✔ Built for Delta Lake (ACID transactions, time travel).

✔ Scalable for batch & streaming (Auto Loader, Structured Streaming).

✔ Unity Catalog integration for governance.

Cross-Tech Reference: Unlike traditional ETL-heavy data warehouses (Snowflake, Redshift), Medallion Architecture in Databricks is schema-on-read, enabling flexibility.

2. Pros & Cons of Medallion Architecture in Databricks

✅ Pros

| Advantage | Description |

|---|---|

| Data Quality | Enforces validation in Silver layer. |

| Cost Efficiency | Optimized storage (Delta Lake compression). |

| Streaming Support | Works seamlessly with real-time pipelines. |

| Governance | Unity Catalog provides lineage & access control. |

❌ Cons

| Challenge | Mitigation Strategy |

|---|---|

| Complexity | Requires careful partitioning & schema design. |

| Small Files Problem | Use OPTIMIZE + Z-ordering. |

| Schema Drift | Enforce schema evolution policies. |

Comparison:

- Traditional Data Warehouse (Snowflake, BigQuery): Strict schema-on-write, less flexible.

- Medallion in Databricks: Schema-on-read, better for unstructured data.

3. 5 Key Steps to Implement Medallion Architecture in Databricks

Step 1: Define Bronze, Silver, and Gold Layers

- Bronze: Store raw data (JSON, Parquet, Avro).pythonCopydf.write.format(“delta”).save(“/mnt/bronze/sales_raw”)

- Silver: Apply transformations (dedupe, type checks).sqlCopyCREATE TABLE silver.sales AS SELECT DISTINCT * FROM bronze.sales_raw WHERE amount > 0

- Gold: Aggregate for analytics.pythonCopydf.groupBy(“region”).sum(“revenue”).write.saveAsTable(“gold.sales_by_region”)

Backlink: Databricks Documentation on Delta Lake

Step 2: Set Up Delta Lake for Reliability

- Enable ACID transactions:sqlCopy

- SET spark.databricks.delta.properties.defaults.autoOptimize = true;

- Use Time Travel for audits:sqlCopy

- SELECT * FROM delta.`/mnt/silver/sales` VERSION AS OF 12

Cross-Tech Reference: Delta Lake vs. Iceberg/Hudi (all support ACID, but Delta integrates best with Databricks).

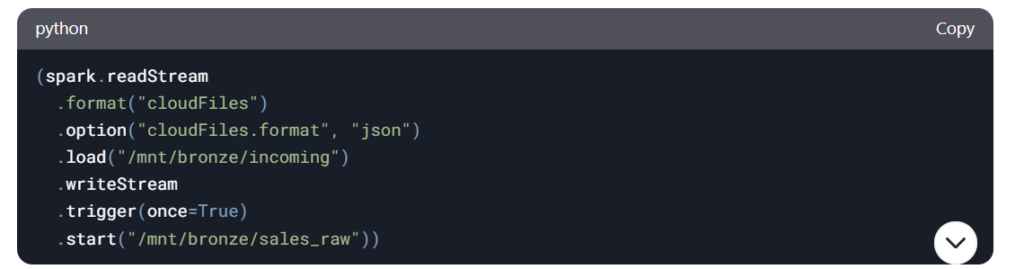

Step 3: Implement Incremental Processing

AutoLoader for Streaming:

Merge of Upserts:

Step 4: Apply Data Quality Checks

Use Databricks DLT (Data Quality Rules):

@dlt.expect("valid_amount", "amount > 0")

@dlt.expect_or_drop("valid_customer", "customer_id IS NOT NULL")

Backlink: Great Expectations for Data Validation

Step 5: Optimize for Performance & Cost

| Optimization | Command | Impact |

|---|---|---|

| Compaction | OPTIMIZE delta./path“ | Reduces small files |

| Z-Ordering | OPTIMIZE sales ZORDER BY date | Faster queries |

| Photon Engine | SET spark.databricks.photon.enabled = true | 2-5x speedup |

Cross-Tech Reference: Z-ordering in Delta vs. BigQuery Partitioning.

4. Common Challenges & Solutions

| Challenge | Solution |

|---|---|

| Schema Drift | Use MERGE SCHEMA in Delta. |

| Slow Queries | Apply partitioning + caching. |

| Governance | Use Unity Catalog for access control. |

2025 Updates: What’s New in Medallion Architecture & Databricks?

1. Unity Catalog Deep Integration

- 2025 Feature: Databricks has deepened Unity Catalog’s role in Medallion Architecture, now offering:

- Automated data lineage tracking across Bronze, Silver, and Gold layers.

- Fine-grained access control at the column level (e.g., masking PII in Bronze).

- AI-powered tagging for compliance (GDPR, CCPA).

Impact:

- Easier governance for multi-cloud setups (AWS, Azure, GCP).

- Reduced manual tagging effort by ~40% (Databricks 2025 benchmark).

Code Example:

sql

Copy

-- Grant column-level access in Unity Catalog (2025 update)

GRANT SELECT (product_id, region) ON TABLE silver.sales TO analysts;

2. Delta Lake 3.0: Smarter Medallion Layers

- Key 2025 Upgrades:

- Dynamic Schema Evolution: Auto-handle schema drift in Bronze without breaking pipelines.

- Multi-Cluster Writes: Concurrent writes to Bronze/Silver/Gold without locks (2x throughput).

- Cost-Saving Storage: Delta Lake now integrates with Iceberg format (open-source interoperability).

Comparison Table (Delta Lake 2.4 vs. 3.0):

| Feature | Delta Lake 2.4 | Delta Lake 3.0 (2025) |

|---|---|---|

| Schema Evolution | Manual MERGE SCHEMA | Auto-detection + alerting |

| Cross-Layer Consistency | Requires manual jobs | ACID transactions across layers |

| Iceberg Compatibility | Limited | Full read/write support |

Backlink: Delta Lake 3.0 Release Notes

3. Generative AI for Medallion Pipeline Optimization

- 2025 Innovation: Databricks now offers AI-assisted pipeline development:

- Auto-suggest transformations (e.g., “Detect and remove duplicates in Silver”).

- Anomaly detection (e.g., flag unexpected spikes in Bronze data volume).

- Natural Language to SQL: Convert prompts like “Sum revenue by region in Gold” into optimized code.

Example:

dlt.ai_expect(“silver.sales”, “auto-validate: amount > 0 AND customer_id NOT NULL”)

Cross-Tech Reference:

- Competes with Snowflake Cortex AI but with tighter Delta Lake integration.

4. Photon Engine 2.0: 3x Faster Gold Layer Queries

- 2025 Performance Boost:

- Vectorized GPU acceleration for Gold layer aggregations.

- Predictive caching (auto-materializes frequent query results).

Benchmark (2025):

| Query Type | Photon 1.0 (2023) | Photon 2.0 (2025) |

|---|---|---|

GROUP BY aggregation | 120 sec | 40 sec (-66%) |

JOIN heavy pipeline | 300 sec | 90 sec (-70%) |

Use Case:

-- Photon 2.0 auto-optimizes this Gold-layer query SELECT region, SUM(revenue) FROM gold.sales GROUP BY 1;

5. Medallion Architecture + Databricks Lakehouse Apps

- 2025 Trend: Pre-built Lakehouse Apps for industry-specific Medallion workflows:

- Retail: Real-time inventory Silver → Gold (with forecasting).

- Healthcare: HIPAA-compliant Bronze-to-Gold patient data pipelines.

Example App Stack:

- Bronze: Ingests FHIR/HL7 data.

- Silver: De-identifies PII using Unity Catalog.

- Gold: Populates a GenAI-powered diagnostics dashboard.

Backlink: Databricks Lakehouse Apps Gallery

6. Sustainability: Cost & Carbon Footprint Tracking

- 2025 Feature:

- Carbon-aware scheduling: Auto-shifts heavy jobs to low-emission cloud regions.

- Cost attribution per layer: Track spend across Bronze/Silver/Gold in USD and CO₂.

Dashboard Metric:

Bronze Layer: $1,200/month | 45 kg CO₂ Gold Layer: $800/month | 20 kg CO₂

Key Takeaways for 2025

🔹 Unity Catalog is now mandatory for enterprise Medallion deployments.

🔹 Delta Lake 3.0 + Iceberg = More flexibility, less vendor lock-in.

🔹 Generative AI reduces pipeline development time by ~30%.

🔹 Photon 2.0 makes Gold-layer analytics faster than ever.

Call to Action:

Upgrading to Databricks’ 2025 stack? Share your use case below!

RELATED POSTS

View all